I find Blu-ray too compressed. The original standard, anyway. I haven't seen the 4K ones. I got really distracted by the colour banding.

Which is why I prefer to watch on Blu-ray, which is why PS5 needs a disc drive :s

Streaming is simply the ugly last choice.

You just linked a compressed stream lol.

xDriver69x

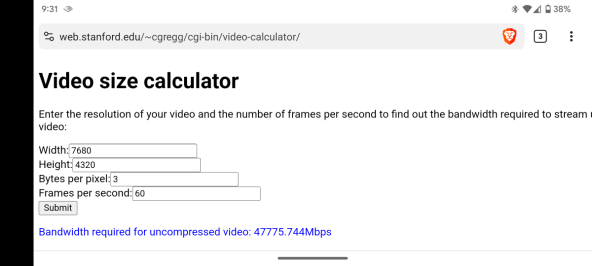

Would you like to wager? Or shall I just help educate? There are RAW 8K video cameras, and have been for many years. What you think and what is might not be the same thing.
